Weight SVD

Context

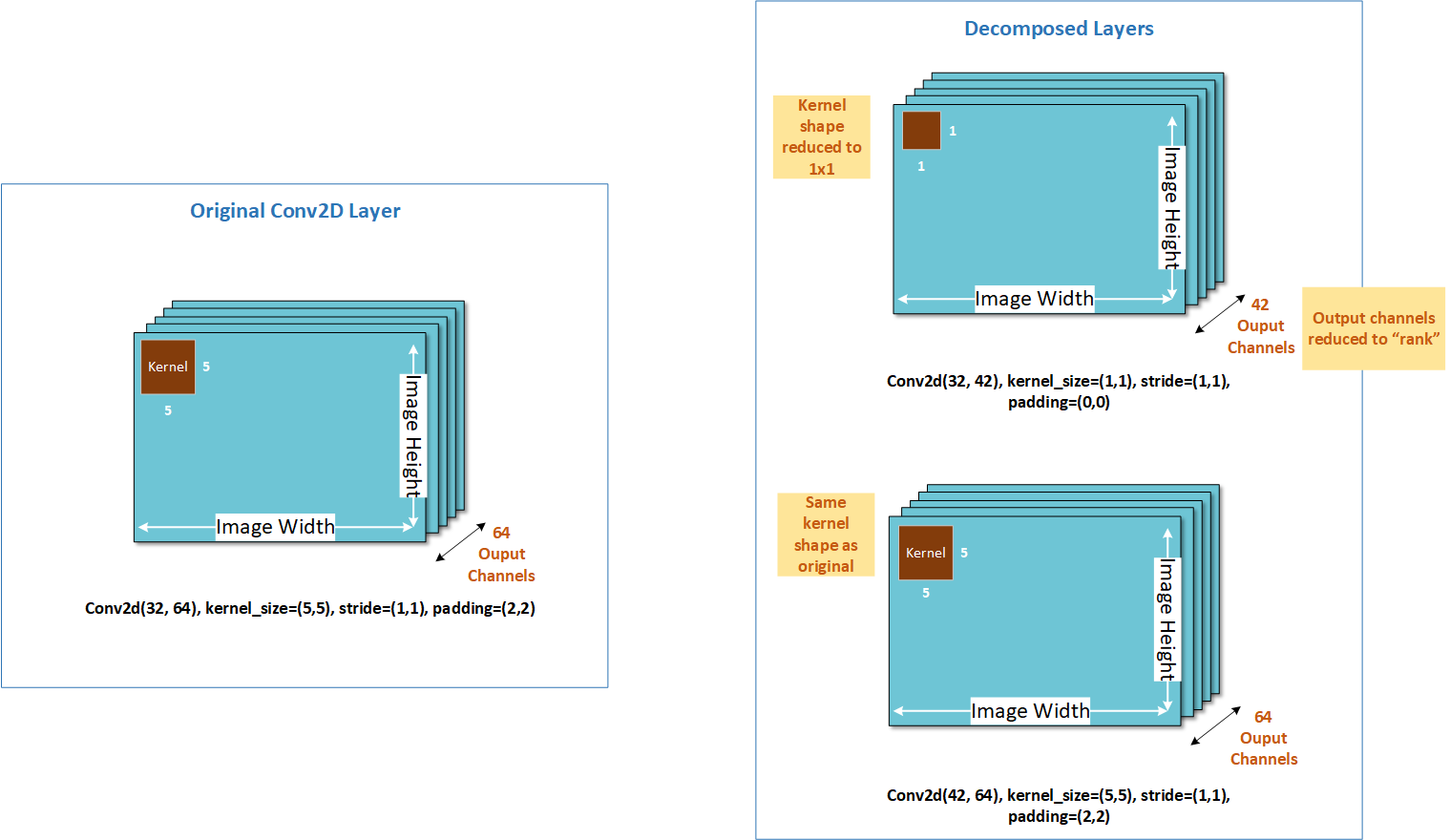

Weight singular value decomposition (Weight SVD) is a technique that decomposes one large layer (large in terms of multiply-accumulate (MAC) operations or memory) into two smaller layers.

Consider a convolution (Conv) layer with a kernel of shape (m, n, h, w), where:

m is the number of input channels

n is the number of output channels

h is the height of the kernel

w is the width of the kernel

Weight SVD decomposes the kernel into one of size (m, k, 1, 1) and another of size (k, n, h, w), where k is called the rank. The smaller the value of k, the larger the degree of compression.
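To make the effect of the rank concrete, the following is a minimal sketch that counts weights before and after the decomposition, using only the kernel shapes given above. The layer dimensions (64 input channels, 128 output channels, 3x3 kernel) and the helper names are illustrative assumptions, and the count reflects a memory-style cost only, not AIMET's own cost calculation.

```python
def conv_weight_count(in_channels, out_channels, kernel_h, kernel_w):
    """Number of weights in a Conv kernel of shape (in, out, h, w)."""
    return in_channels * out_channels * kernel_h * kernel_w


def weight_svd_comp_ratio(m, n, h, w, k):
    """Ratio of decomposed weight count to original weight count for rank k."""
    original = conv_weight_count(m, n, h, w)
    # First layer has shape (m, k, 1, 1), second layer has shape (k, n, h, w).
    decomposed = conv_weight_count(m, k, 1, 1) + conv_weight_count(k, n, h, w)
    return decomposed / original


# Hypothetical layer: 64 input channels, 128 output channels, 3x3 kernel.
m, n, h, w = 64, 128, 3, 3
ratios = {k: weight_svd_comp_ratio(m, n, h, w, k) for k in (8, 16, 32)}

for k, ratio in ratios.items():
    print(f"rank {k}: compressed/original = {ratio:.3f}")
```

A lower ratio means a smaller decomposed layer, so lowering k increases compression. For large k the two layers together can cost more than the original layer, which is why the rank has to be chosen well below that point.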

The figure above illustrates how Weight SVD decomposes the output channel dimension. AIMET currently supports Weight SVD for convolution (Conv) and fully connected (FC) layers.

Workflow

Code example

Setup

Compression using Weight SVD

Compressing using Weight SVD in auto mode
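A minimal sketch of auto mode, assembled from the API described in the API section of this page. Several details are assumptions rather than confirmed usage: that RankSelectScheme is importable from aimet_torch.common.defs, that AutoModeParams and Mode are accessed as attributes of WeightSvdParameters, and the specific values chosen for the target ratio, evaluation iterations and input shape. The function name is hypothetical.

```python
from decimal import Decimal


def compress_weight_svd_auto(model, eval_callback, input_shape=(1, 3, 224, 224)):
    """Compress `model` with Weight SVD in auto mode using greedy rank selection."""
    # Imports are deferred so this sketch can be loaded without AIMET installed.
    from aimet_torch.compress import ModelCompressor
    from aimet_torch.defs import WeightSvdParameters
    # Assumption: RankSelectScheme lives next to the other configuration enums.
    from aimet_torch.common.defs import (
        CompressionScheme,
        CostMetric,
        GreedySelectionParameters,
        RankSelectScheme,
    )

    # Illustrative target: compressed cost is 80% of the original cost.
    greedy_params = GreedySelectionParameters(
        target_comp_ratio=Decimal("0.8"),
        num_comp_ratio_candidates=10,
    )
    # Assumption: AutoModeParams and Mode are nested in WeightSvdParameters.
    auto_params = WeightSvdParameters.AutoModeParams(
        rank_select_scheme=RankSelectScheme.greedy,
        select_params=greedy_params,
        modules_to_ignore=None,
    )
    params = WeightSvdParameters(
        mode=WeightSvdParameters.Mode.auto,
        params=auto_params,
    )

    compressed_model, stats = ModelCompressor.compress_model(
        model,
        eval_callback=eval_callback,
        eval_iterations=10,
        input_shape=input_shape,
        compress_scheme=CompressionScheme.weight_svd,
        cost_metric=CostMetric.mac,
        parameters=params,
    )
    return compressed_model, stats
```

The eval_callback argument is expected to follow the evaluate(model, iterations, use_cuda) signature described under compress_model.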

Compressing using Weight SVD in manual mode with multiplicity = 8 for rank rounding
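A minimal sketch of manual mode with multiplicity set to 8, again built from the API described in the API section of this page. The import location of ModuleCompRatioPair, its (module, comp_ratio) constructor order, and the nesting of ManualModeParams and Mode under WeightSvdParameters are assumptions. The function name and the module_ratio_pairs argument are hypothetical.

```python
def compress_weight_svd_manual(model, eval_callback, module_ratio_pairs,
                               input_shape=(1, 3, 224, 224)):
    """Compress selected layers of `model` with Weight SVD in manual mode.

    `module_ratio_pairs` is a list of (module, comp_ratio) tuples chosen by the caller.
    """
    # Imports are deferred so this sketch can be loaded without AIMET installed.
    from aimet_torch.compress import ModelCompressor
    # Assumption: ModuleCompRatioPair is defined alongside WeightSvdParameters.
    from aimet_torch.defs import ModuleCompRatioPair, WeightSvdParameters
    from aimet_torch.common.defs import CompressionScheme, CostMetric

    pairs = [ModuleCompRatioPair(module, ratio) for module, ratio in module_ratio_pairs]
    manual_params = WeightSvdParameters.ManualModeParams(pairs)

    # multiplicity=8 asks for ranks to be rounded to a multiple of 8.
    params = WeightSvdParameters(
        mode=WeightSvdParameters.Mode.manual,
        params=manual_params,
        multiplicity=8,
    )

    return ModelCompressor.compress_model(
        model,
        eval_callback=eval_callback,
        eval_iterations=10,
        input_shape=input_shape,
        compress_scheme=CompressionScheme.weight_svd,
        cost_metric=CostMetric.mac,
        parameters=params,
    )
```

A call might look like compress_weight_svd_manual(model, evaluate, [(model.conv1, 0.5)]), where model.conv1 stands in for whichever layer is to be compressed.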

API

Top-level API for Compression

- class aimet_torch.compress.ModelCompressor

AIMET model compressor: Enables model compression using various schemes

- static ModelCompressor.compress_model(model, eval_callback, eval_iterations, input_shape, compress_scheme, cost_metric, parameters, trainer=None, visualization_url=None)

Compress a given model using the specified parameters

- Parameters:

model (Module) – Model to compress

eval_callback (Callable[[Any, Optional[int], bool], float]) – Evaluation callback. Expected signature is evaluate(model, iterations, use_cuda). Expected to return an accuracy metric.

eval_iterations – Iterations to run evaluation for

trainer – Training class. Contains a callable, train_model, which takes the model, the layer being fine-tuned, and an optional train_flag parameter. None if per-layer fine-tuning is not required while creating the final compressed model.

input_shape (Tuple) – Shape of the input tensor for the model

compress_scheme (CompressionScheme) – Compression scheme. See the enum for allowed values.

cost_metric (CostMetric) – Cost metric to use for the compression ratio (either mac or memory)

parameters (Union[SpatialSvdParameters, WeightSvdParameters, ChannelPruningParameters]) – Compression parameters specific to the given compression scheme

visualization_url – URL, supplied by the user, where visualizations will appear

- Return type:

Tuple[Module, CompressionStats]

- Returns:

A tuple of the compressed model and compression statistics
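The eval_callback signature above can be satisfied with a small closure. This is a sketch under stated assumptions: a classification model, a hypothetical data_loader that yields (inputs, labels) batches of tensors, and top-1 accuracy as the metric. None of these choices come from AIMET itself, and make_eval_callback is an illustrative name.

```python
def make_eval_callback(data_loader):
    """Build an evaluate(model, iterations, use_cuda) callback around a data loader."""

    def evaluate(model, iterations, use_cuda):
        # Deferred import so the factory can be defined without PyTorch loaded.
        import torch

        device = torch.device("cuda" if use_cuda else "cpu")
        model = model.to(device).eval()

        correct = 0
        total = 0
        with torch.no_grad():
            for batch_idx, (inputs, labels) in enumerate(data_loader):
                # iterations=None means "evaluate on the whole loader".
                if iterations is not None and batch_idx >= iterations:
                    break
                outputs = model(inputs.to(device))
                predictions = outputs.argmax(dim=1)
                correct += (predictions == labels.to(device)).sum().item()
                total += labels.numel()

        # Top-1 accuracy as a float, as the callback is expected to return.
        return correct / total if total else 0.0

    return evaluate
```

The returned function can be passed as eval_callback, with eval_iterations controlling how many batches each evaluation consumes.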

Greedy Selection Parameters

- class aimet_torch.common.defs.GreedySelectionParameters(target_comp_ratio, num_comp_ratio_candidates=10, use_monotonic_fit=False, saved_eval_scores_dict=None)

Configuration parameters for the Greedy compression-ratio selection algorithm

- Variables:

target_comp_ratio – Target compression ratio, expressed as a value between 0 and 1. Compression ratio is the ratio of the cost of the compressed model to the cost of the original model.

num_comp_ratio_candidates – Number of comp-ratio candidates to analyze per layer. More candidates allow a more granular distribution of compression at the cost of increased run-time during analysis. Default value is 10. Value should be greater than 1.

use_monotonic_fit – If True, eval scores in the eval dictionary are fitted to a monotonically increasing function. This is useful if the eval dict scores for some layers are not monotonically increasing. By default, this option is set to False.

saved_eval_scores_dict – Path to the eval_scores dictionary pickle file that was saved in a previous run. This is useful to speed up experiments, for example when trying different target compression ratios. AIMET saves the eval_scores dictionary pickle file automatically in a ./data directory relative to the current path. The num_comp_ratio_candidates parameter is ignored when this option is used.

Configuration Definitions

- class aimet_torch.common.defs.CostMetric(value)

Enumeration of metrics to measure cost of a model/layer

- mac = 1

MAC: Cost modeled for compute requirements

- memory = 2

Memory: Cost modeled for space requirements

- class aimet_torch.common.defs.CompressionScheme(value)

Enumeration of compression schemes supported in AIMET

- channel_pruning = 3

Channel Pruning

- spatial_svd = 2

Spatial SVD

- weight_svd = 1

Weight SVD

Weight SVD Configuration

- class aimet_torch.defs.WeightSvdParameters(mode, params, multiplicity=1)

Configuration parameters for Weight SVD compression

- Parameters:

mode (Mode) – Either auto mode or manual mode

params (Union[ManualModeParams, AutoModeParams]) – Parameters for the mode selected

multiplicity – The multiplicity to which ranks/input channels will get rounded. Default: 1
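To illustrate what rounding a rank to a multiplicity means, here is a minimal sketch. The rounding direction is an assumption: this page does not state whether AIMET rounds ranks up or down, so the sketch rounds up and caps the result at a caller-supplied maximum. The function round_rank is hypothetical and is not part of the AIMET API.

```python
def round_rank(rank, multiplicity, max_rank):
    """Round `rank` up to the nearest multiple of `multiplicity`, capped at `max_rank`.

    Assumption: round-up behaviour is chosen for illustration only.
    """
    if multiplicity <= 1:
        # multiplicity=1 (the default) leaves the rank unchanged.
        return rank
    # Ceiling division, then scale back to a multiple of `multiplicity`.
    rounded = -(-rank // multiplicity) * multiplicity
    return min(rounded, max_rank)


# With multiplicity=8, a selected rank of 13 becomes 16.
rounded_rank = round_rank(13, multiplicity=8, max_rank=64)
```

Ranks that are multiples of 8 tend to map more cleanly onto hardware vector widths, which is the usual motivation for a setting like multiplicity = 8.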

- class AutoModeParams(rank_select_scheme, select_params, modules_to_ignore=None)

Configuration parameters for auto-mode compression

- Parameters:

rank_select_scheme (RankSelectScheme) – Rank selection scheme. Supports two options: greedy and TAR.

select_params (GreedySelectionParameters) – Parameters for the greedy/TAR comp-ratio selection algorithm

modules_to_ignore (Optional[List[Module]]) – List of modules to ignore (None indicates nothing to ignore)

- class ManualModeParams(list_of_module_comp_ratio_pairs)

Configuration parameters for manual-mode Weight SVD compression

- Parameters:

list_of_module_comp_ratio_pairs (List[ModuleCompRatioPair]) – List of (module, comp-ratio) pairs

- class Mode(value)

Mode enumeration

- auto = 2

Auto mode

- manual = 1

Manual mode