Low-Power Blockwise Quantization (LPBQ)

Qualcomm® AI Engine Direct supports an alternative to blockwise quantization called Low-Power Blockwise Quantization (LPBQ).

Note

For details on generic blockwise quantization, see Blockwise Quantization.

In LPBQ, blockwise encodings at a lower bit width are adjusted such that they lie on a common higher-bit-width per-channel grid.
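To make the idea of a common per-channel grid concrete, here is a minimal sketch in plain Python. It rests on an assumption that is not stated above: each per-block scale is rounded to an integer multiple of a single per-channel scale, and the multiplier takes one of 2^(decompressed_bw - bitwidth) values. The function name `lpbq_adjust_scales` and the sample scales are hypothetical, and this is not presented as the exact procedure used by AIMET or Qualcomm® AI Engine Direct.

```python
def lpbq_adjust_scales(block_scales, bitwidth=4, decompressed_bw=8):
    """Snap the per-block scales of one output channel onto a shared grid.

    Illustrative assumption: every block scale becomes an integer multiple
    of one per-channel scale, with the multiplier clamped to [1, levels].
    """
    levels = 2 ** (decompressed_bw - bitwidth)      # 16 for 4-bit -> 8-bit
    channel_scale = max(block_scales) / levels      # step of the per-channel grid
    int_scales = [
        min(levels, max(1, round(s / channel_scale)))  # clamp to [1, levels]
        for s in block_scales
    ]
    adjusted = [k * channel_scale for k in int_scales]
    return channel_scale, int_scales, adjusted


# Four blocks of one output channel, each with its own low-bit-width scale.
channel_scale, int_scales, adjusted = lpbq_adjust_scales([0.08, 0.04, 0.021, 0.16])
print(int_scales)
```

Under this assumption, a 4-bit integer weight multiplied by its block's integer multiplier still fits in the 8-bit range, so the adjusted weights can be treated as ordinary per-channel data with one scale per channel.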

LPBQ offers the following benefits over Blockwise Quantization (BQ):

Enables models to run on existing per-channel kernels

Generated encodings are more storage-efficient than those of BQ
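The storage benefit can be estimated with simple arithmetic. The sketch below counts only the bits needed for scales, not for the weights themselves, and makes several assumptions that are not in the text above: floating-point scales take 32 bits, BQ keeps one floating-point scale per block, and LPBQ keeps one floating-point scale per output channel plus a (decompressed_bw - bitwidth)-bit integer per block. The helper name `scale_storage_bits` is hypothetical.

```python
def scale_storage_bits(out_channels, in_channels, block_size,
                       bitwidth=4, decompressed_bw=8, float_bits=32):
    """Compare the bits needed to store scales for BQ vs. LPBQ (illustrative)."""
    num_blocks = in_channels // block_size
    # BQ (assumed layout): one floating-point scale per block.
    bq_bits = out_channels * num_blocks * float_bits
    # LPBQ (assumed layout): one floating-point scale per output channel
    # plus a small integer multiplier per block.
    lpbq_bits = (out_channels * float_bits
                 + out_channels * num_blocks * (decompressed_bw - bitwidth))
    return bq_bits, lpbq_bits


# A 4096 x 4096 weight matrix with block size 64.
bq_bits, lpbq_bits = scale_storage_bits(4096, 4096, block_size=64)
print(bq_bits, lpbq_bits)
```

With these assumed sizes, the scale metadata for LPBQ is roughly one seventh of that for BQ; the exact ratio depends on the real storage format.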

Qualcomm® AI Engine Direct has the following restrictions on LPBQ:

Blockwise quantization runs on weight (not activation) quantizers only

Block size must be set to one for the output channel dimension

Input channel dimension must be divisible by the block size
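The two shape restrictions above can be checked before LPBQ is enabled. The following is a minimal sketch, assuming a weight laid out as (out_channels, in_channels, ...) as in PyTorch Linear and Conv layers; `check_lpbq_block_size` is a hypothetical helper, not part of AIMET.

```python
def check_lpbq_block_size(weight_shape, block_size):
    """Return the number of blocks per output channel, or raise ValueError.

    weight_shape: (out_channels, in_channels, ...) of the weight tensor.
    block_size:   (out_block, in_block) block size for the two channel dims.
    """
    in_channels = weight_shape[1]
    out_block, in_block = block_size
    if out_block != 1:
        raise ValueError("block size along the output channel dimension must be 1")
    if in_channels % in_block != 0:
        raise ValueError(
            f"input channels ({in_channels}) not divisible by block size ({in_block})"
        )
    return in_channels // in_block


num_blocks = check_lpbq_block_size((512, 256), (1, 64))
print(num_blocks)
```

A weight of shape (512, 256) with block size (1, 64) passes and yields four blocks per output channel, while an input channel count such as 100 would be rejected.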

Apply LPBQ

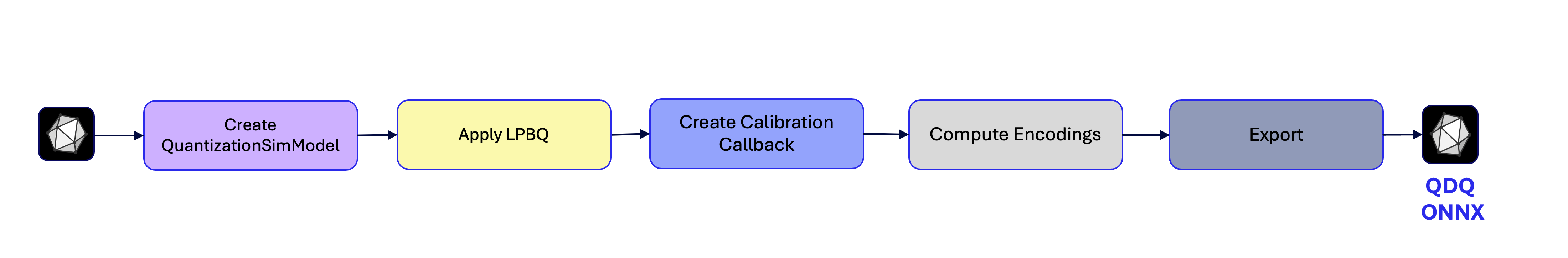

This section walks through how to enable LPBQ in a Post-Training Quantization workflow.

The LPBQ workflow consists of the following steps:

Create QuantizationSimModel

Enable LPBQ for selected operations (an additional step on top of the Post-Training Quantization workflow)

Create a calibration callback to be used for computing quantization parameters

Compute encodings

Evaluate the model

Export the model

Apply LPBQ to a selected set of modules in PyTorch with set_grouped_blockwise_quantization_for_weights(), or to a selected set of operations in ONNX with set_lpbq_for_params().

Either function can be called multiple times to set a different LPBQ configuration for each target set of modules or operations.

# PyTorch
# 1. Create QuantizationSimModel
# ...
import torch
from aimet_torch.quantsim.config_utils import set_grouped_blockwise_quantization_for_weights

# 2. Apply LPBQ to the weights of every torch.nn.Linear module
set_grouped_blockwise_quantization_for_weights(
    sim=quantsim,
    arg=[torch.nn.Linear],
    bitwidth=4,
    symmetric=True,
    decompressed_bw=8,
    block_size=64,
    block_grouping=-1,
)

# Continue with calibration

# ONNX
# 1. Create QuantizationSimModel
# ...
from aimet_onnx.quantsim import set_lpbq_for_params

# 2. Apply LPBQ
set_lpbq_for_params(
    sim=quantsim,
    bitwidth=4,
    block_size=64,
    op_types=("Gemm", "MatMul", "Conv"),
    nodes_to_exclude=["conv1", "linear10"],
)

# Continue with calibration

API

Top-level API to configure LPBQ quantization

- aimet_torch.v2.quantsim.config_utils.set_grouped_blockwise_quantization_for_weights(sim, arg, bitwidth, symmetric, decompressed_bw, block_size, block_grouping=-1)

Set weight parameter quantizers of modules to grouped blockwise.

- Parameters:

sim (QuantizationSimModel) – Quantsim to set weight quantizers for

arg – Argument determining which modules to set. This can be one of the following:

A list of torch.nn.Module types, in which case all modules whose type is in the list will be set

A list of torch.nn.Modules, in which case all modules in the list will be set

A callable function which takes a torch.nn.Module as input and returns True if the module is to be set, False otherwise

bitwidth (int) – Bitwidth for affine quantization

symmetric (bool) – True if affine quantization is symmetric, False otherwise

decompressed_bw (int) – Decompressed bitwidth for grouped block quantization

block_size (Union[int, Tuple[int, ...]]) – Block size for affine quantization. This can be an array, in which case all layers identified by arg must have weight shapes compatible with the array length, or an integer value, in which case the block size will be applied to the weight’s in_channels dimension and per-channel quantization will be used for the weight’s out_channels dimension.

A block size value of -1 for a particular dimension is equivalent to a block size equal to the size of that particular dimension.

block_grouping (Union[int, Tuple[int, ...]]) – Block grouping for grouped block quantization. This can be an array, in which case all layers identified by arg must have weight shapes compatible with the array length, or an integer value, in which case the block grouping will be applied to the weight’s in_channels dimension, and no other dimensions will experience block grouping.

A block grouping value of -1 for a particular dimension is equivalent to a block grouping equal to the number of blocks for that particular dimension.
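The three accepted forms of arg (a list of module types, a list of module instances, or a callable) all amount to a yes/no test per module. The sketch below shows one way such an argument could be normalized into a single predicate. It is an illustration only, not AIMET's implementation: `make_module_filter` is a hypothetical helper, and the `Linear` and `Conv2d` classes are plain stand-ins for torch.nn.Module subclasses so that the example runs without PyTorch.

```python
import inspect


def make_module_filter(arg):
    """Turn the three accepted forms of `arg` into one predicate (illustrative)."""
    if callable(arg):
        return arg                                        # already a predicate
    arg = list(arg)
    if all(inspect.isclass(a) for a in arg):
        types = tuple(arg)
        return lambda module: isinstance(module, types)   # match by type
    return lambda module: any(module is a for a in arg)   # match by identity


class Linear: ...       # stand-ins for torch.nn.Module subclasses
class Conv2d: ...


modules = [Linear(), Conv2d(), Linear()]
by_type = [m for m in modules if make_module_filter([Linear])(m)]
by_instance = [m for m in modules if make_module_filter([modules[1]])(m)]
by_callable = [
    m for m in modules
    if make_module_filter(lambda mod: isinstance(mod, Conv2d))(m)
]
print(len(by_type), len(by_instance), len(by_callable))
```

Selecting by type is the broadest option, selecting by instance is the most explicit, and a callable allows conditions on module attributes such as weight shape.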

Examples

>>> # Assume 'sim' is a QuantizationSimModel object imported from aimet_torch.v2.quantsim
>>> # Allows setting of all Linear and Conv weight quantizers to LPBQ with block_size 64 in the input_channels dimension:
>>> set_grouped_blockwise_quantization_for_weights(sim=sim,
...                                                arg=[torch.nn.Linear, torch.nn.Conv2d],
...                                                bitwidth=4,
...                                                symmetric=True,
...                                                decompressed_bw=8,
...                                                block_size=64,
...                                                block_grouping=-1)
>>> # Allows setting of specific model layers' weight quantizer to LPBQ with block_size 64 in the input_channels dimension:
>>> set_grouped_blockwise_quantization_for_weights(sim=sim,
...                                                arg=[sim.model.conv2, sim.model.linear1],
...                                                bitwidth=4,
...                                                symmetric=True,
...                                                decompressed_bw=8,
...                                                block_size=64,
...                                                block_grouping=-1)
>>> # Allows setting of only Convolution layers with input channels dim == 128 to LPBQ with block_size 64 in the input_channels dimension:
>>> set_grouped_blockwise_quantization_for_weights(sim=sim,
...                                                arg=lambda module: isinstance(module, torch.nn.Conv2d) and module.weight.shape[1] == 128,
...                                                bitwidth=4,
...                                                symmetric=True,
...                                                decompressed_bw=8,
...                                                block_size=64,
...                                                block_grouping=-1)

This utility enables you to configure quantized layers to use grouped blockwise quantization by supplying decompressed_bw, block_size, and block_grouping. As with set_blockwise_quantization_for_weights(), block_grouping can be a single value. In this case, the value is assigned to the input channels dimension, and all other dimensions are assigned a value of one.

Different layers can have different numbers of blocks for the input channels dimension for the same block size. If you assign -1 as the single block_grouping value, the input channels dimension automatically uses a block_grouping value equal to the number of blocks in each affected layer. This enables you to configure all affected layers for LPBQ with a single API call.
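The way -1 resolves for block_size and block_grouping can be sketched in a few lines. This is a simplified illustration for the input channels dimension only; `resolve_blocks` is a hypothetical helper that mirrors the description above, not a function provided by AIMET.

```python
def resolve_blocks(in_channels, block_size, block_grouping=-1):
    """Return (block_size, num_blocks, block_grouping) for the input channels dim."""
    if block_size == -1:
        block_size = in_channels            # one block spans the whole dimension
    if in_channels % block_size != 0:
        raise ValueError("in_channels must be divisible by block_size")
    num_blocks = in_channels // block_size
    if block_grouping == -1:
        block_grouping = num_blocks         # one group covers every block
    return block_size, num_blocks, block_grouping


# Two layers with different input channel counts, same call arguments.
for in_channels in (128, 512):
    print(in_channels, resolve_blocks(in_channels, block_size=64, block_grouping=-1))
```

The layer with 128 input channels ends up with 2 blocks in one group and the layer with 512 input channels with 8 blocks in one group, which is why a single call with block_grouping=-1 can cover layers of different sizes.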

- aimet_onnx.quantsim.set_lpbq_for_params(sim, bitwidth, block_size, *, op_types=None, nodes_to_exclude=None, nodes_to_include=None, strict=None)

Set weight quantizers of specified nodes to use low-power blockwise quantization.

This function is overloaded with the following signatures:

- aimet_onnx.quantsim.set_lpbq_for_params(sim, bitwidth, block_size, *, nodes_to_include=None)

- Parameters:

sim (QuantizationSimModel) – Quantsim to set weight quantizers for

bitwidth (int) – Compressed bitwidth for LPBQ

block_size (int) – Block size for affine quantization. The block size will be applied to the weight’s input features dimension, while per-channel will be used for the weight’s output features dimension

nodes_to_include (Set[str]) – Set of onnx node names to include for blockwise weight quantization.

- aimet_onnx.quantsim.set_lpbq_for_params(sim, bitwidth, block_size, *, op_types=None, nodes_to_exclude=None, strict=False)

- Parameters:

sim (QuantizationSimModel) – Quantsim to set weight quantizers for

bitwidth (int) – Compressed bitwidth for LPBQ

block_size (int) – Block size for affine quantization. The block size will be applied to the weight’s input features dimension, while per-channel will be used for the weight’s output features dimension

op_types (Union[str, Set[str]]) – Operator types for which to enable grouped blockwise weight quantization

nodes_to_exclude (Set[str]) – Set of onnx node names to exclude from blockwise weight quantization.

strict (bool) – If False, only enable blockwise quant for layers with dimensions evenly divisible by block_size. If True, throw an error for layers with incompatible shapes.
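The effect of strict can be illustrated with a small pure-Python sketch. It assumes a hypothetical mapping from node name to input channel count and a hypothetical helper `select_nodes_for_lpbq`; it mirrors the behavior described for the parameter and is not the aimet_onnx implementation.

```python
def select_nodes_for_lpbq(in_channels_by_node, block_size, strict=False):
    """Pick nodes whose input channels divide evenly by block_size (illustrative).

    in_channels_by_node: hypothetical mapping of node name -> input channel count.
    """
    selected = []
    for name, in_channels in in_channels_by_node.items():
        if in_channels % block_size == 0:
            selected.append(name)
        elif strict:
            raise ValueError(f"{name}: {in_channels} is not divisible by {block_size}")
        # strict=False: leave the incompatible node's quantizer unchanged
    return selected


nodes = {"fc1": 256, "fc2": 100, "fc3": 128}
print(select_nodes_for_lpbq(nodes, block_size=64))   # fc2 is skipped
```

With strict=False the incompatible node is skipped and the rest are configured; with strict=True the same input raises an error, which can help catch shape mismatches early.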

Examples

>>> sim = QuantizationSimModel(...)
>>> set_lpbq_for_params(sim, bitwidth=4, block_size=64, op_types={"Gemm", "MatMul", "Conv"})
>>> # or
>>> set_lpbq_for_params(sim, bitwidth=4, block_size=64, nodes_to_include={"/lm_head/MatMul", ...})